Microsoft has announced a new update for the Azure AI platform. It includes hallucination and malicious prompt attack detection and mitigation systems. Azure AI customers now have access to new LLM-powered tools that greatly enhance the level of protection against untoward or unintended responses in their AI applications.

Microsoft strengthens Azure AI defenses with hallucination and malicious attack detection

Sarah Bird, Microsoft’s Chief Product Officer of Responsible AI, explains that these safety features will protect the “average” Azure user who may not specialize in identifying or fixing AI vulnerabilities. TheVerge covered it extensively and eluded how these new tools can identify potential vulnerabilities, monitor hallucinations, and block malicious prompts in real time, organizations will gain valuable insight into the performance and security of their AI models.

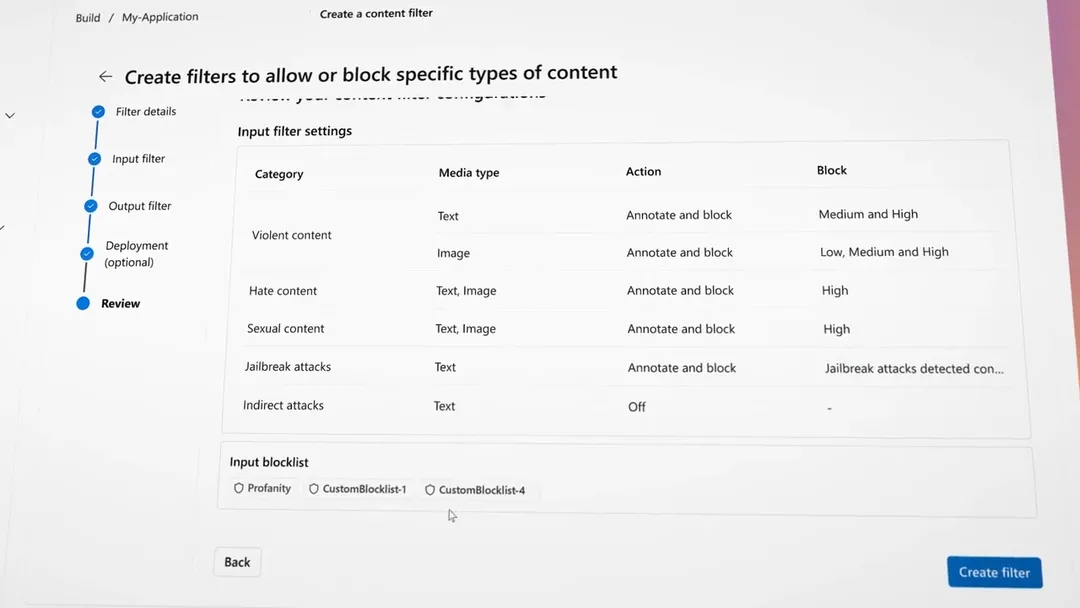

These features include Prompt Shields to prevent prompt injections/malicious prompts, Groundedness Detection for the identification of hallucinations, and Safety Evaluations that rate model vulnerability. While Azure AI already has these attributes on preview, other functionalities like directing models towards safe outputs or tracking potentially problematic users are due for future releases.

One thing that distinguishes Microsoft’s approach is the emphasis on customized control, which lets Azure users toggle filters for hate speech or violence in AI models. This helps with apprehensions regarding bias or inappropriate content, allowing users to adjust safety settings according to their particular requirements.

The monitoring system checks prompts and responses for banned words or hidden prompts before they pass on to the model for processing. This eliminates any chances of making AI produce outputs contrary to desired safety and ethical standards and generating disputed or harmful materials as a result.

Azure AI now rivals GPT-4 and Llama 2 in terms of safety and protection

Though these safety features are readily available with popular models such as GPT-4 and Llama 2, those who use smaller or less known open-source AI systems may be required to manually incorporate them into their models. Nevertheless, Microsoft’s commitment to improving AI safety and security demonstrates its dedication to providing robust and trustworthy AI solutions on Azure.

Microsoft’s efforts in enhancing safety demonstrate a growing interest in AI technology’s responsible use. Microsoft therefore aims at creating a safer and more secure environment where customers can detect and prevent risks before they materialize while using the AI ecosystem in Azure.