The WWDC 2025 debut of visionOS 3 will offer the first glimpse of what an annual update cycle looks like for Apple Vision Pro software. Here are some of our hopes for the platform.

There is a call for a lighter, cheaper Apple Vision Pro, but Apple can’t provide that through a software update. Still, there are plenty of quality-of-life updates that could help boost the platform.

When Apple Vision Pro launched in February 2024, it ran visionOS 1.0 with Apple’s view of what the platform should be. After the headset spent a few months in the hands of more than just Apple engineers, the company was able to tackle a lot of issues that beta testing hadn’t identified.

visionOS 2, unveiled just a few months later at WWDC 2024, introduced a new way to access Control Center, volume, and other settings. Before that, trying to access necessary controls was literally an eye-rolling experience.

A lot of upcoming changes depend on how Apple views Apple Vision Pro and its importance in the lineup. The company is focused on getting Apple Intelligence off the ground, not to mention a redesign across the ecosystem, so visionOS may take a back seat in 2025.

Here’s what I’m hoping to see with visionOS 3 during WWDC 2025.

More native Apple-made apps

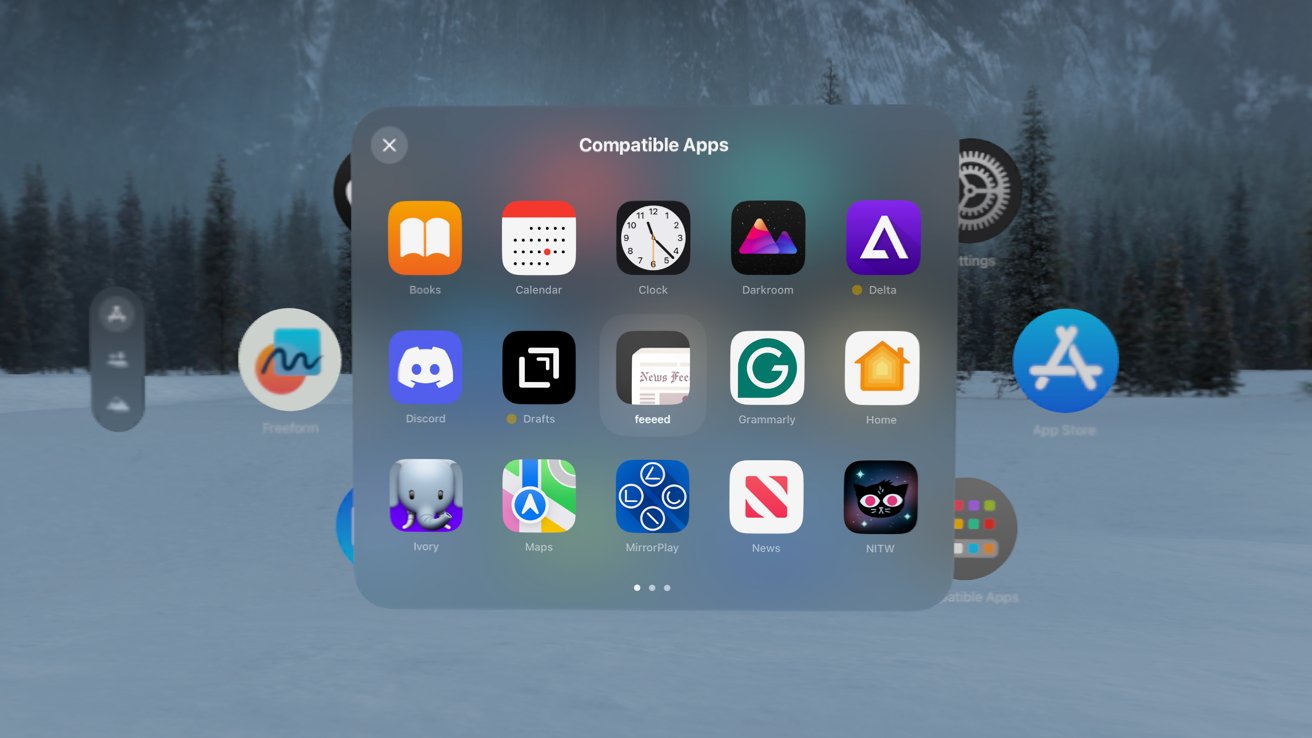

The most basic and obvious item on this wish list is more native apps. Apple hasn’t moved any of its compatible iPad apps to native visionOS apps since the device launched, though some brand-new native apps have been introduced.

The following apps are still operating in iPad-compatible mode:

- Clock

- Calendar

- Reminders

- Podcasts

- Shortcuts

- Photomator

- Home

- News

- Maps

- Numbers

- Pages

- Books

- Stocks

- Voice Memos

It’s quite the list, considering I use nearly all of these apps on a regular basis. Without Apple setting an example of how it thinks these apps should look and behave in visionOS, few developers have gone native either.

We’re one year into public development of visionOS, but Apple also worked internally on the product for many months prior to launch. Apple Vision Pro was first revealed two years ago this June, so it seems inexcusable that all of Apple’s apps aren’t native yet, even with a bare minimum of design and support.

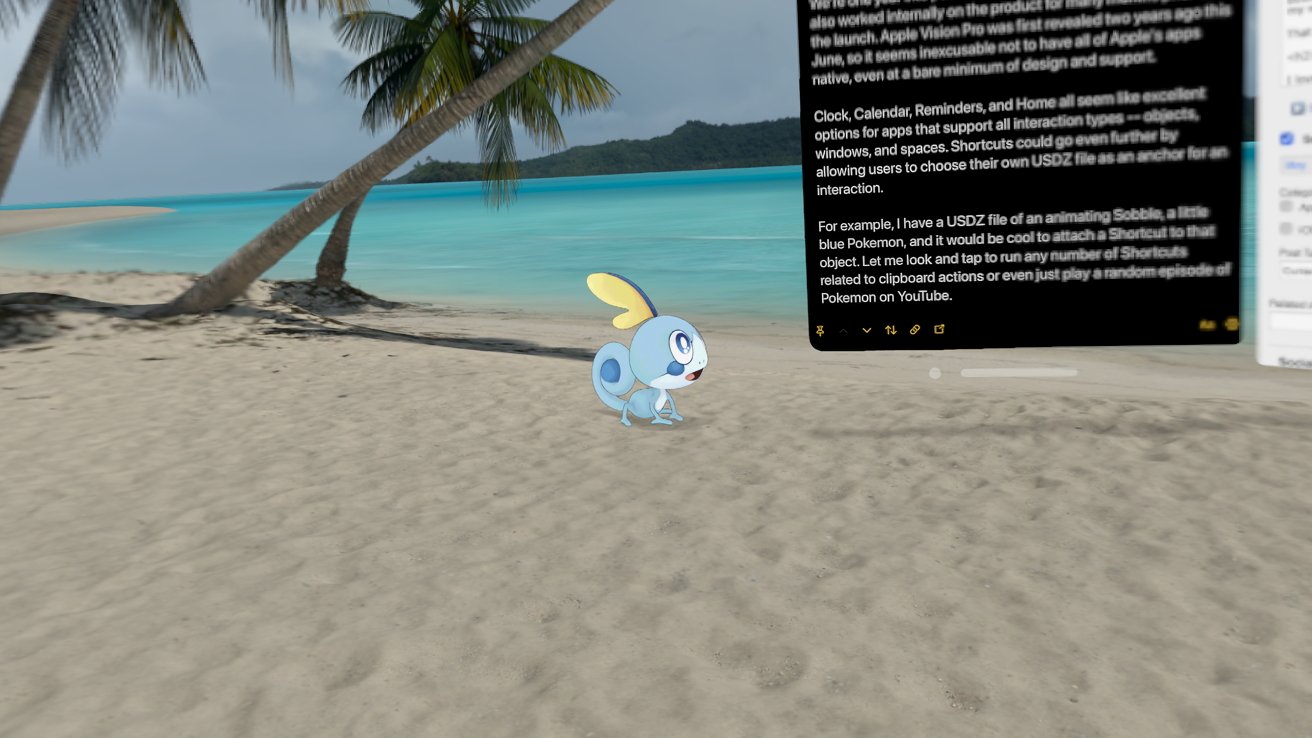

Clock, Calendar, Reminders, and Home all seem like excellent candidates for apps that support all interaction types: objects, windows, and spaces. Shortcuts could go even further by letting users choose their own USDZ file as an anchor for an interaction.

For example, I have a USDZ file of an animated Sobble, a little blue Pokémon, and it would be cool to attach a Shortcut to that object. I could look at it and tap to run any number of Shortcuts related to clipboard actions, or even just play a random episode of Pokémon on YouTube.

There’s a lot Apple could do with these apps. Let me place a physical book on my desk and tap it to launch reference materials in the Books app, have Reminders show up as little sticky notes I can stick to app windows or objects, or show a virtual thermostat I can set on my wall.

That brings me to my next idea for Apple Vision Pro — immersive environments.

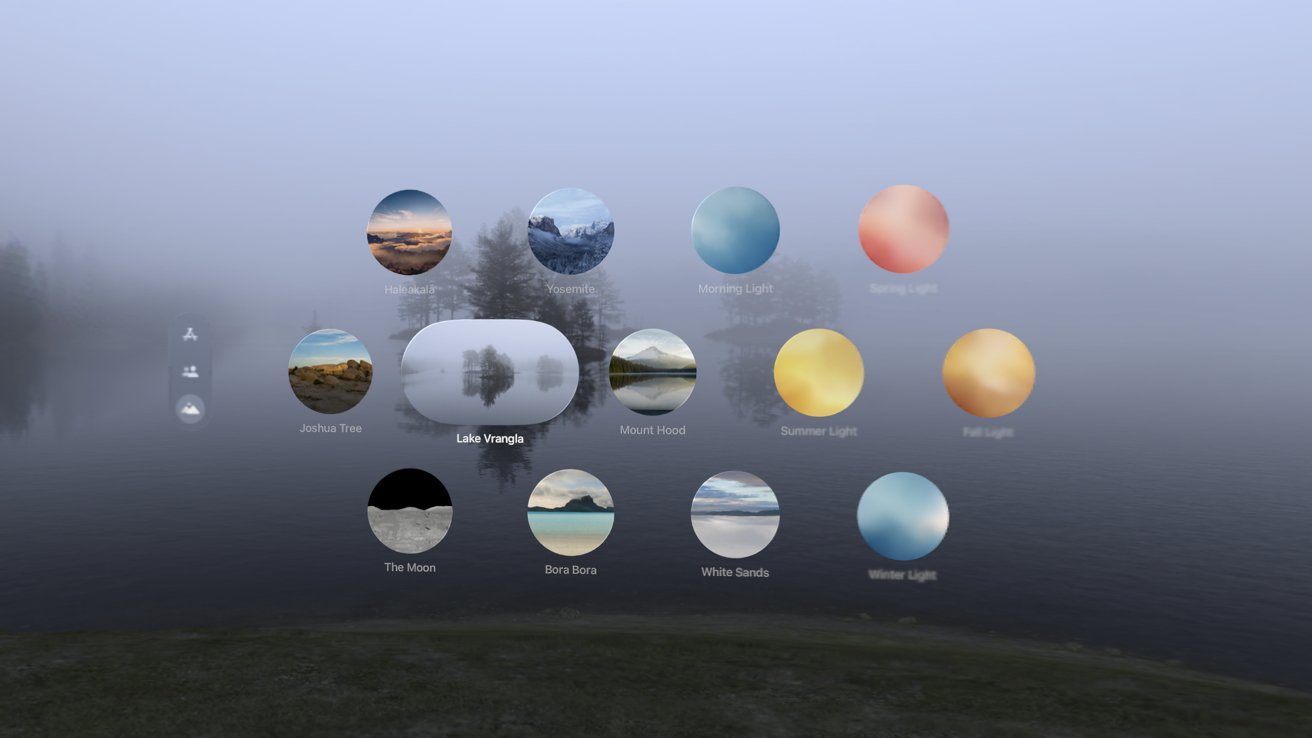

New immersive environments and controls

I love Apple’s immersive environments. You can instantly work on a beach, near a cliff’s edge, or under a massive mountain.

That said, there are a few ways I think Apple should rethink these spaces. First, Apple needs to open up immersive environments to third parties in a way that lets them exist outside of apps.

It would also be interesting if users could design and upload their own spaces. Perhaps Apple or a third party could make an immersive space designer that lets users save and use their creations, much like how wallpapers work today.

There’s not really a good place to mention this, so I’ll stick it here. Apple desperately needs to detect third-party keyboards so they can pass through immersive environments and anchor the predictive text bar. Perhaps my next idea could help with that.

This may not appeal to anyone but me, and it may entirely miss Apple’s planned use of Apple Vision Pro. That said, one of the first oddities I noticed about working in these virtual environments is the lack of a desk or any other surfaces.

Now, I’m not saying there needs to be a cubicle environment, though I wouldn’t be against it as a kind of gag. However, I’d love to see the option of adding anchored surfaces to the space.

For example, there are three desks in my office, and I know Apple Vision Pro knows where they are and where the walls behind them are. What I’m suggesting is that Apple let me place virtual desks in the same physical locations as the real desks and use them to hold 3D objects or anchor windows.

Let’s look back at my idea for Apple’s native apps. If the Books app let me place a virtual book on the desk, I could look to my right, see the book always anchored to that desk, and select it to open a Books window to a reference page.

I could place other objects on the desktop surfaces, like a virtual clock for the Clock app or a virtual notepad that opens a specific note. You could even take this a step further and anchor a virtual calendar to your desk calendar.

Surfaces don’t have to be limited to tables. Apple should allow users to have a wall in their virtual spaces as well. Imagine if you could look left and see a virtual wall where you can place calendar objects and other widgets.

I envision it a bit like how the video game The Sims treats walls. Let me design a space, put up walls, hide walls, etc. Perhaps the effect could make it seem like I’m in a room at a desk looking out of a floor-to-ceiling window at Bora Bora instead of sitting directly in the sand.

Maybe this is getting a little too skeuomorphic, but that seems to be the idea behind spatial computing. Imagine a day when this is all viewed through a pair of glasses: physical object anchoring and digital representations of objects will all come into play.

That brings me to my next idea.

Permanent anchoring for windows and objects

Apple has improved Apple Vision Pro’s ability to recall where windows are placed between sessions. It isn’t perfect, though, and pressing and holding the Digital Crown to recenter can cause chaos.

There needs to be a way to set a kind of permanent anchor using a physical object. This is where Apple’s rumored AirTag 2 might come into play, but for now, an iPhone or iPad could play the part.

Let the user enter an anchor reset mode that relies on the iPhone’s U-series chip to set the anchor point. That way, the user could set a global anchor based on an iPhone sitting in a MagSafe stand on their desk and always have everything arranged around it in space without issue.

Having a permanent anchor point, established using a combination of GPS and precision location, would make window memory and automation far more practical. For example, verify the iPhone anchor point, then tap a Shortcut to enter a work mode where all of the windows open to preset positions around the anchor.

Anchored positions could be saved as part of Focus modes, too, and different anchors could trigger different automations.

For example, if your iPhone is in the bedroom MagSafe mount, it could verify its position as part of an automation whenever you apply an anchored window layout. So, whether you enter a Work Focus in the office or in the bedroom, the windows arrange themselves accordingly.

Automation-controlled windowing using physical anchor points could open up a variety of options. If Apple leans heavily into using UWB in visionOS, virtual windows could open and close passively as the user moves around the home.

This would get even more interesting as digital objects become the norm. There could be virtual displays anchored to the wall, plant care instructions attached to plants throughout the home, and Home controls for fans, lights, and more anchored to the devices themselves.

The possibilities are endless.

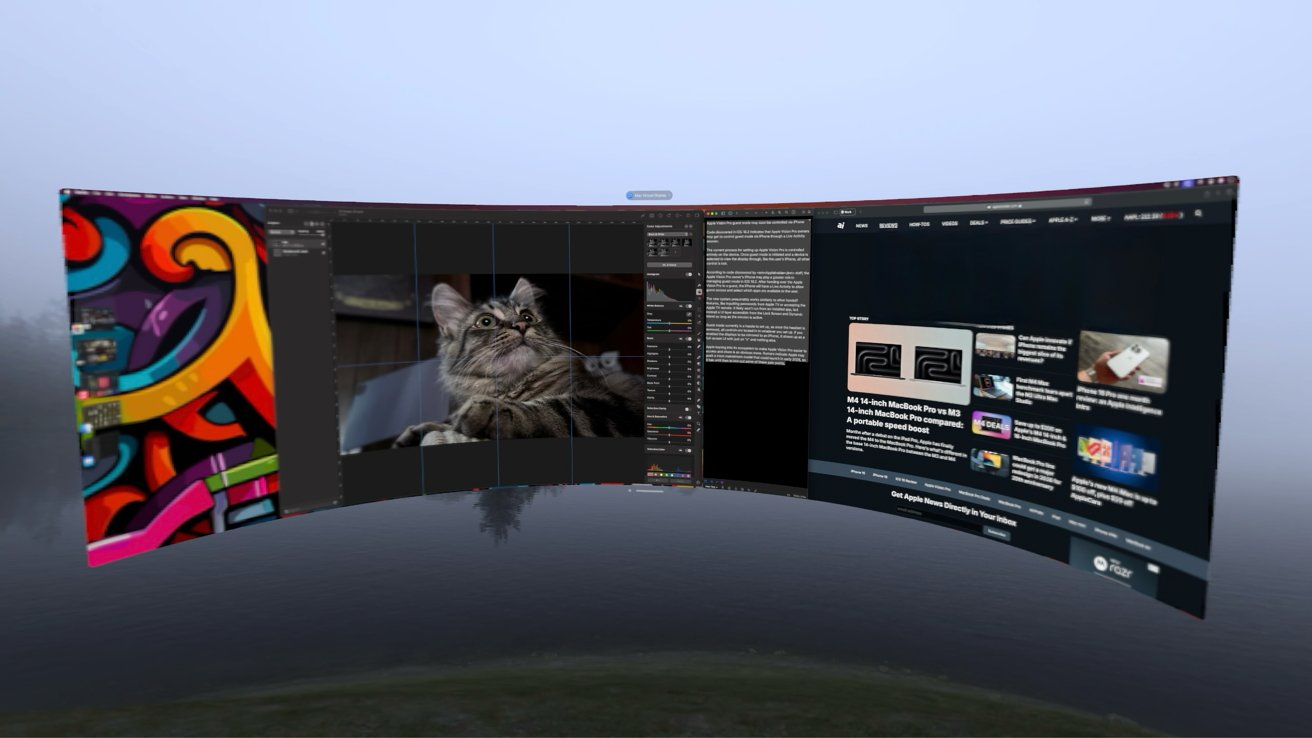

Mac Virtual Display updates

Coming back down to more grounded concepts, Apple could do some interesting work with Mac Virtual Display. The Ultra Wide display settings are an incredible upgrade to the system, but there’s always more that can be done.

Listeners of the paid “+” segment of the AppleInsider Podcast will have heard me mention this one in passing. Apple could let users drag apps out of the Mac’s virtual display and treat them as free-floating apps within visionOS.

Now, of course, the UI would still likely rely heavily on precision pointing via a mouse, but I’ll get to eye tracking in a moment. There’s another tidbit I think helps bring this feature home.

Rumors suggest Apple is going to redesign all of its operating systems with a more uniform, visionOS-inspired look. Whatever that means for macOS 16, it could help Mac apps behave better within a visionOS environment.

I do believe that no matter how powerful visionOS gets or how popular Apple’s inevitable smart glasses become, the Mac will endure. There’s no reason to expect Apple Vision Pro, or especially glasses, to be powerful enough for every pro-level task.

So, imagine if you could run Mac Virtual Display, then pull programs out into the spatial computing space: full Xcode, Final Cut Pro, or other apps, navigable with a connected mouse on a near-infinite display.

Perhaps Mac app windows in visionOS could also gain some UI tweaks that make them easier to navigate by looking and pinching.

I believe this is a smart middle step that makes both ecosystems stronger. Rather than forcing onto the platform a paradigm it can’t or shouldn’t handle, let it work alongside the hardware that’s already optimized for that paradigm.

We’ve seen this mistake made with iPad. Too often, the discussion centered on how iPad could replace the Mac rather than how it could augment it.

Just because iPad and Apple Vision Pro are part of the future of computing doesn’t mean either has to be the only computing endpoint. Apple’s products work best when they work together.

It’s something I’ve argued before: Apple Vision Pro is an expensive product and shouldn’t need constant upgrades at this price. So, if an M4 Mac is brought in to augment the M2-powered Apple Vision Pro, that’s just a strength of the ecosystem.

Improved eye tracking

Eye tracking technology is core to the Apple Vision Pro experience. However, compared with the large, sticky iPadOS cursor and the slim, precise macOS pointer, it is the least accurate and most error-prone input method.

No matter how intently you look at a target in visionOS, you inevitably end up tapping something above, below, or in an adjacent app instead. There needs to be the kind of specificity and control a cursor offers, and our eyes alone just don’t seem to be enough.

I’m not a software engineer, but there’s surely a solution. One option I’d suggest is the ability to look, then tap and hold, then move between selection targets using the same scrolling gesture used in windows. Releasing the tap would make the selection.

Whatever the solution, there are too many colliding operations on Apple Vision Pro. App buttons collide with window adjustments, and too often you inadvertently close a window when you were trying to grab an adjustment bar.

The issue worsens on the web in Safari or within any compatible iPad app. Anywhere Apple Vision Pro wasn’t considered the primary interaction tool, the experience constantly falls down in this way.

I expect Apple will have some solution or, at the very least, more precise eye tracking via software updates and improved algorithms.

Other possible updates

I’ll wrap up this wish list with two more obvious picks. First, Apple needs to get back to work on Personas.

Personas haven’t improved much since their initial release, when Tim Chaten and I recorded a podcast using them.

The slightly off-putting Personas are well within the uncanny valley, so I’m not sure what the solution is. Apple could either try to make them more realistic and animated or roll them back to something more akin to Memoji.

Personally, I’d love to see an option to just use a Memoji as a Persona.

In any case, scanning facial features and superimposing them is the wrong approach. Apple should use the data to create an avatar based on your features rather than depth-mapping your face.

Video games got this right ages ago. Some games even used primitive photo recognition to pre-adjust sliders. Apple Vision Pro’s 3D cameras and scanners are much more advanced and could easily create a better solution.

My recommendation would be to aim for Pixar-like detail in hair, skin, and clothing without going so far as to make the avatar look like a Call of Duty cutscene.

By making the avatar more of a 3D representation instead of a mold, it would open up the opportunity for better customization. Let users choose clothing, hairstyles, or even just go fantastical with new eye colors and skin tones.

Yes, Personas should go full video game, with more professional, human-like defaults for office settings or a custom avatar for a game of Dungeons & Dragons over Apple Vision Pro.

Speaking of video games, Apple needs to bring third-party motion controller support to Apple Vision Pro. Supporting PlayStation VR2 controllers might require Apple to work out a distribution deal with Sony, but it is a no-brainer feature.

Apple should also work with a controller maker to design a bespoke Apple Vision Pro controller. The people over at Surreal Touch would make great partners, or perhaps even an acquisition target.

And speaking of gaming, there’s already a data connector where the Developer Strap plugs in. There is absolutely no reason why it can’t be used to pass 3D games from a PC or Mac to Apple Vision Pro, like the Valve Index does.

Ship a new strap at WWDC, Apple. Let it be used for wider compatibility with the larger VR platform — the PC.

Apple Vision Pro is a well-made piece of hardware, but that doesn’t make it perfect. Software and operating systems can’t be developed in a vacuum, so it will take many more years to perfect visionOS.

Here’s hoping for some surprises at WWDC 2025 with visionOS 3.