Google I/O is the company’s biggest event of the year. Even the launch of the Pixel 10 series later in 2025 won’t compare in size and scope to I/O. This week, I was lucky enough to attend I/O on the steps of Google’s Mountain View headquarters, and it was a total blast.

Unfortunately, people who watch the keynote livestream at home don’t get to experience what happens after Sundar Pichai exits the stage. That’s when I/O gets incredibly fun, because you can wander around the grounds and actually try out much of the upcoming tech and many of the services Googlers just gushed about during the main event.

Here, I want to tell you about the five coolest things I was lucky enough to try! It’s not the same as being there, but it will have to do for now.

Android XR prototype glasses

Lanh Nguyen / Android Authority

At Google I/O 2024, we first saw hints of a new set of AR glasses. Based around something called Project Astra, the glasses seemed like the modern evolution of Google Glass, the doomed smart glasses the company tried to launch over 10 years ago. All we saw during the 2024 demo, though, was a pre-recorded first-person-view clip of a prototype of these glasses doing cool things around an office, such as identifying objects, remembering where an object once was, and extrapolating complex ideas from simple drawings. Later that year, we found out that these glasses run on something called Android XR, and we actually got to see the wearable prototype in promotional videos supplied by Google.

These glasses were hinted at during I/O 2024, but this year I got to see them on stage and even wear them myself.

This week, Google not only showed off the glasses in real-world on-stage demos, but even gave I/O attendees the chance to try them on. I was able to demo them, and I gotta say: I’m impressed.

First, nearly everything Google showed on stage this year worked for me during my demo. I was able to look at objects and converse with Gemini about them, both seeing its responses in text form on the tiny display and hearing the responses blasted into my ears from the speakers in the glasses’ stems. I was also able to see turn-by-turn navigation instructions and smartphone notifications. I could even take photos with a quick tap on the right stem (although I’m sure they are not great, considering how tiny the camera sensor is).

Nearly everything Google showed during the keynote worked during my hands-on demo. That’s a rarity!

Although the glasses support a live translation feature, I didn’t get to try that out. This is likely due to translation not working quite as expected during the keynote. But hey, they’re prototypes — that’s just how it goes, sometimes.

The one disappointing thing was that the imagery only appeared in my right eye. If I closed my right eye, the imagery vanished, meaning these glasses don’t project onto both eyes simultaneously. A Google rep explained to me that this is by design. Android XR can support devices with two screens, one screen, or even no screens. It just so happened that these prototype glasses were single-screen units. In other words, models that actually hit retail might have dual-screen support or might not, so keep that in mind for the future.

Unfortunately, the prototype glasses only have one display, so if you close your right eye, all projections vanish.

Regardless, the glasses worked well, felt good on my face, and have the pedigree of Google, Samsung, and Qualcomm behind them (Google developing software, Samsung developing hardware, and Qualcomm providing silicon). Honestly, it was so exciting to use them and immediately see that these are not Glass 2.0 but a fully realized wearable product.

Hopefully, we’ll learn more about when these glasses (or those offered by XREAL, Warby Parker, and Gentle Monster) will actually launch, how much they’ll cost, and in what areas they’ll be available. I just hope we won’t need to wait until Google I/O 2026 for that information.

Project Moohan

The prototype AR glasses weren’t the only Android XR wearables available at Google I/O. Although we’ve known about Project Moohan for a while now, very few people have actually been able to test out Samsung’s premium VR headset. Now, I am in that small group of folks.

The first thing I noticed about Project Moohan when I put it on my head was how premium and polished the headset is. This is not some first-run demo with a lot of rough edges to smooth out. If I didn’t know better, I would have assumed this headset was retail-ready. It’s that good.

Project Moohan already feels complete. If it hit store shelves tomorrow, I would buy it.

The headset fit well and had a good weight balance, so the front didn’t feel heavier than the back. The battery pack not being in the headset itself played a big part in this, and having a cable running down my torso and a battery pack in my pocket was less annoying than I thought it would be. Most importantly, I felt I could wear this headset for hours and still be comfortable, which you cannot say about every headset out there.

Once I had Project Moohan on, it was a stunning experience. The displays inside automatically adjusted themselves for my pupillary distance, which was very convenient. And the visual fidelity was incredible: I had a full-color, low-latency view of the real world, with none of the blurriness I’ve experienced with other VR systems.

The display fidelity of Project Moohan was some of the best I’ve ever experienced with similar headsets.

It was also exceptionally easy to control the headset using my hands. With Project Moohan, no controllers are needed. You can control everything using palm gestures, finger pinches, and hand swipes. It was super intuitive, and I found myself comfortable with the operating system in mere minutes.

Of course, Gemini is the star here. There’s a button on the top right of Project Moohan that launches Gemini from any place within Android XR. Once launched, you can give commands, ask questions, or just have a relaxed conversation. Gemini understands what’s on your screen, too, so you can chat with it about whatever it is you’re doing. A Google rep told me how they use this for gaming: if they come across a difficult part of a game, they ask Gemini for help, and it will pull up tutorials or guides without ever needing to leave the game.

Speaking of gaming, Project Moohan supports all manner of controllers. You’ll be able to use traditional VR controllers or even something simpler like an Xbox controller. I wasn’t able to try this out during my short demo, but it made me very excited about this becoming a true gaming powerhouse.

I didn’t get to try it, but Google assured me that Project Moohan will support most gaming controllers, making me very excited about this becoming my new way to game.

The fatal flaw here, though, is the same one we have with the Android XR glasses: we have no idea when Project Moohan is actually coming out. We don’t even know its true commercial name! Google and Samsung say it is coming this year, but I’m skeptical considering how long it’s been since we first saw the project announced and how little headway we’ve made since (the United States’ tariff situation doesn’t help, either). Still, whenever it does land, I will be first in line to get one.

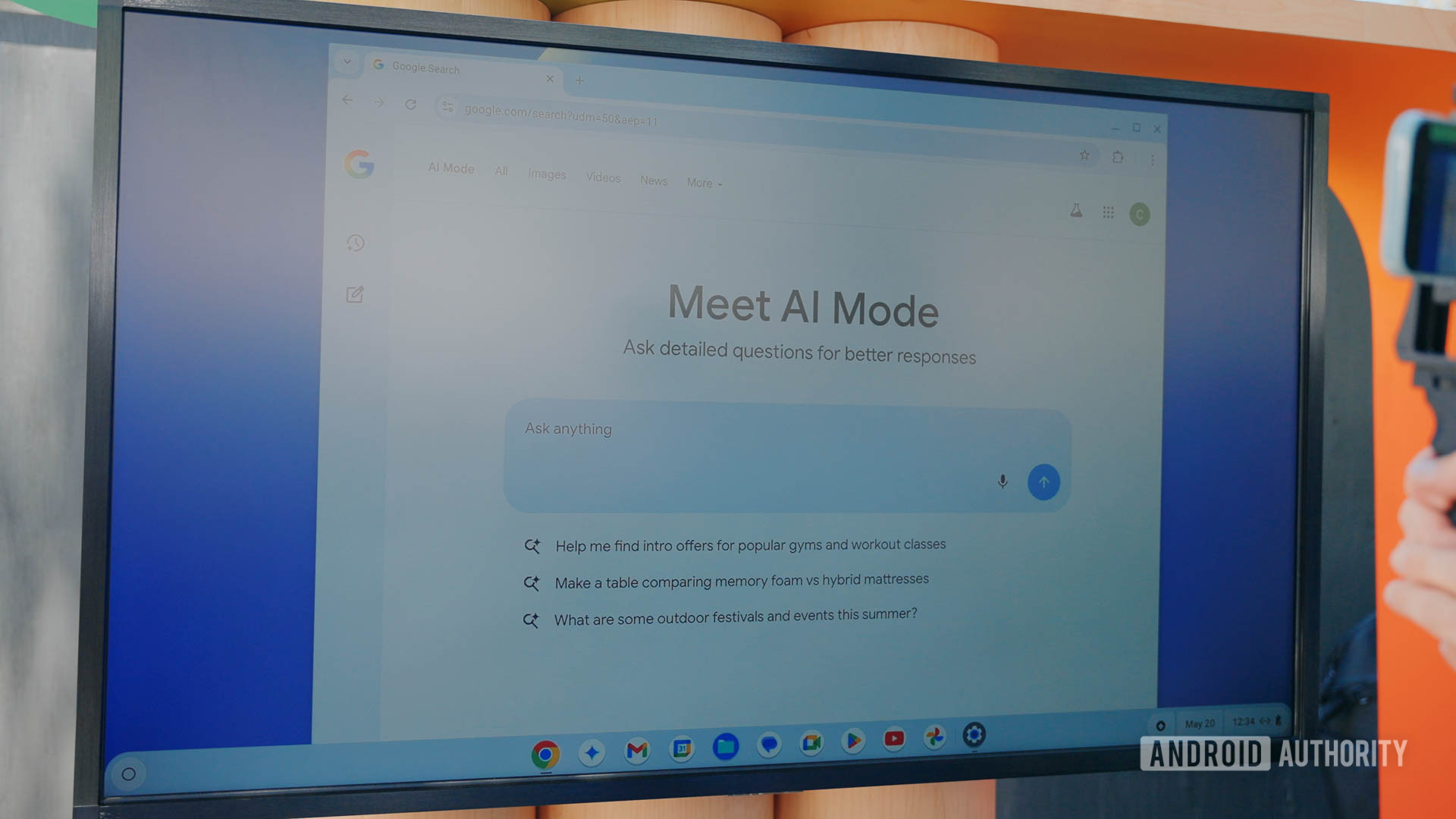

AI Mode in Chrome and Search Live

Moving away from hardware, AI Mode was another star of Google I/O 2025. Think of this as Google Search on AI steroids. Instead of typing in one query and getting back a list of results surrounding it, you can give much more complex queries and get a unique page of results based on multiple searches to provide you with an easy-to-understand overview of what you’re trying to investigate.

AI Mode allows you to do multiple Google searches at once with prompts as long as you need them to be.

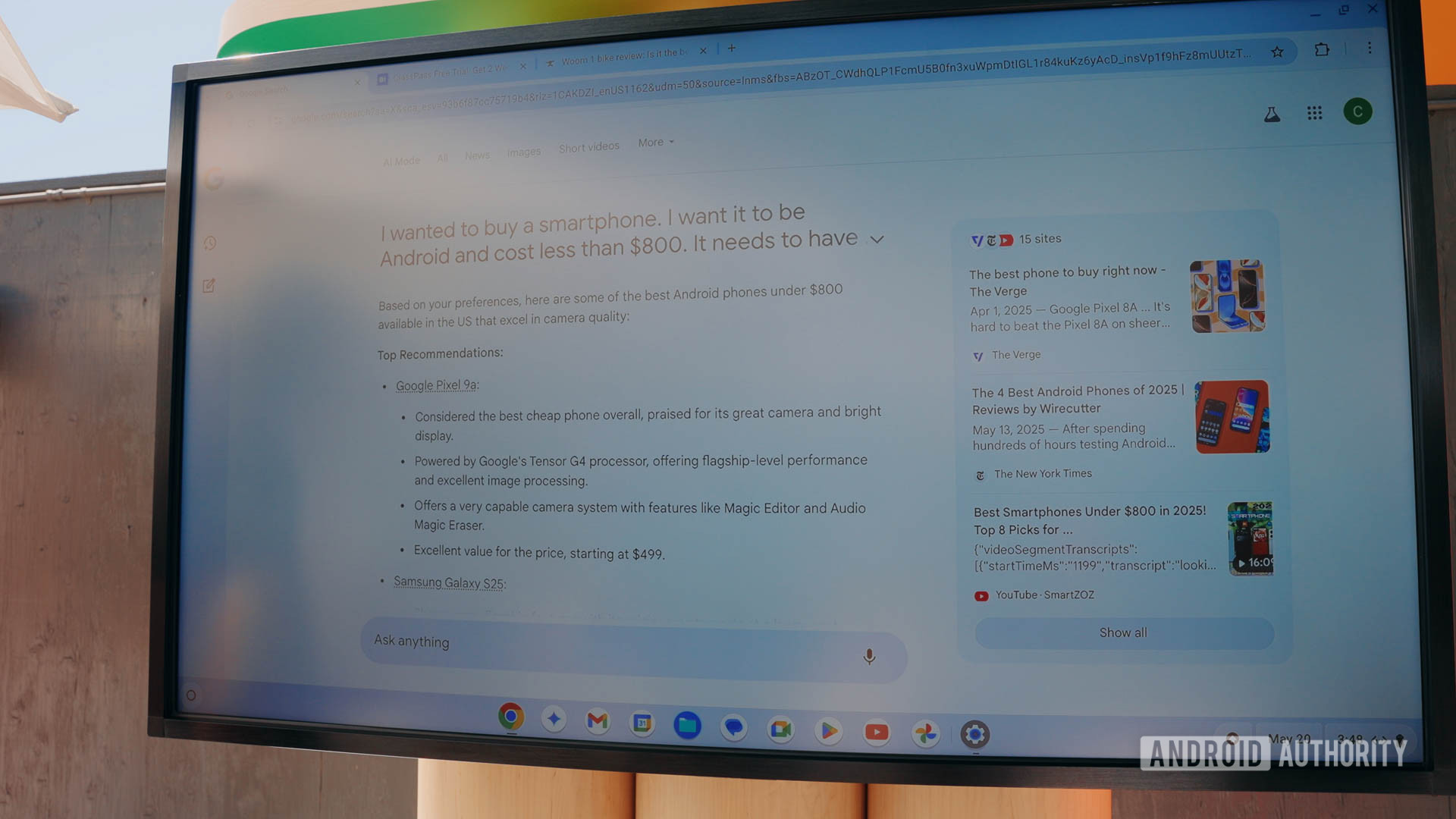

For example, I used it to hunt for a new smartphone. I typed in a pretty long query about how the phone needed to run Android (obviously), cost less than $800, have a good camera, and be available in the United States. Normally, a simple Google search wouldn’t work for this, but AI Mode got right down to it. It returned a page filled with good suggestions for phones, including the Pixel 9a, the Galaxy S25, and the OnePlus 13R, all incredibly solid choices. It even included buy links, YouTube reviews, articles, and more.

The cool thing about AI Mode is that you don’t need to wait to try it for yourself. If you live in the US and have Search Labs enabled, you should already have access to AI Mode at Google.com (if you don’t, you’ll have it soon).

One thing you can’t try out in AI Mode yet, though, is Search Live. This was announced during the keynote and is coming later this summer. Essentially, Search Live allows you to share with Gemini what’s going on in the real world around you through your smartphone camera. If this sounds familiar, that’s because Gemini Live on Android already supports this. With Search Live, though, it will work on any mobile device through the Google app, allowing iPhone users to get in on the fun, too.

With AI Mode you’ll also eventually find Search Live, which allows you to show Gemini the real world using your phone’s camera.

I tried out an early version of Search Live, and it worked just as well as Gemini Live. It will be great for this to be available to everyone everywhere, as it is a very useful tool. However, Google is in dangerous “feature creep” territory now, so hopefully it doesn’t let things get too confusing for consumers about where they need to go to get this service.

Flow

Of everything I saw at Google I/O this year, Flow was the one that left me the most conflicted. Obviously, I think it’s super cool (otherwise it wouldn’t be on this list), but I also think it’s kind of frightening.

Flow is a new filmmaking tool that allows you to easily generate video clips in succession and then edit those clips on a traditional timeline. For example, this could allow you to create an entire film scene by scene using nothing but text prompts. When you generate a clip, you can tweak it with additional prompts or even extend it to get more out of your original creation.

Flow could be a terrific new filmmaking tool, or the death of film as we know it.

What’s more, Flow will also incorporate Veo 3, Google’s latest iteration of its video generation system. Veo 3 allows one to create moving images along with music, sound effects, and even spoken dialogue. This makes Flow a tool that could allow you to create a full film out of thin air.

Using Flow during my demo was so easy. I created some clips of a bugdroid having spaghetti with a cat, and it came out hilarious and cute. I was able to edit the clip, add more clips to it, and extend clips with a few mouse clicks and some more text prompts.

Flow was easy to use and understand, and it worked well enough, but I couldn’t help but wonder why it needs to exist at all.

I didn’t get to try out Veo 3 during my demo, unfortunately. This wasn’t because of a limitation of the system but of time: it takes up to 10 minutes for Veo 3 to create clips. Even if I only made two clips, it would push my demo time beyond what’s reasonable for an event the size of Google I/O.

When I exited the Flow demo, I couldn’t help but think about Google’s The Wizard of Oz remake for Sphere in Las Vegas. Obviously, Flow isn’t going to have people recreating classic films using AI, but it does have the same problematic air to it. Flow left me feeling elated at how cool it is and dismayed by how unnecessary it is.

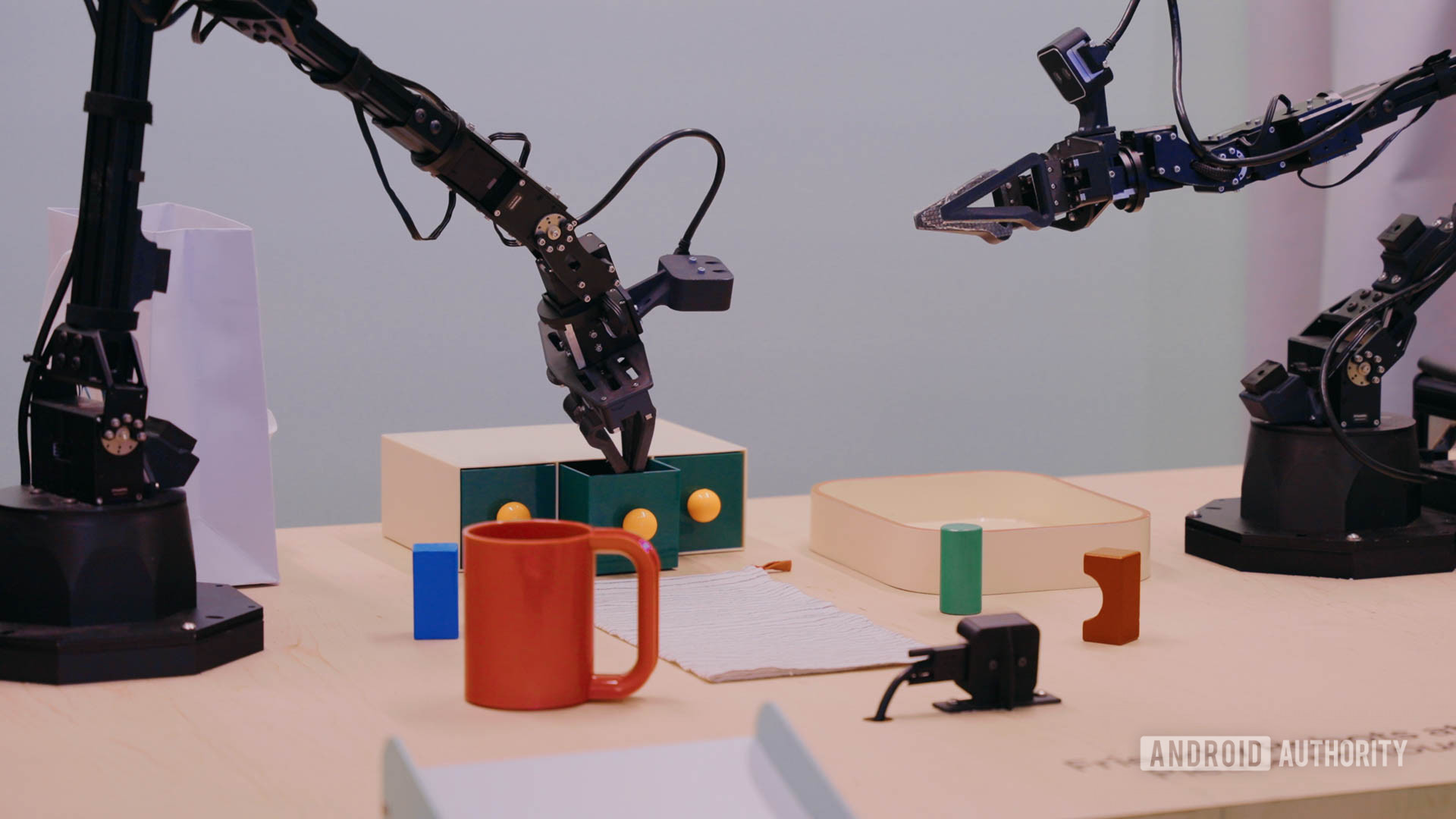

Aloha robotics

Everything I’ve talked about here so far is something you will actually be able to use one day. This last one, though, is not likely to be something you’ll have in your home any time soon. At Google I/O, I got to control two robot arms using only voice commands to Gemini.

The two arms towered over various objects on a table. By speaking into a microphone, I could tell Gemini to pick up an object, move objects, place objects into other objects, and so on, using only natural language to do it.

It’s not every day I get to tell robots what to do!

The robot arms weren’t perfect. If I had them pick up an object and put it into a container, they would do it — but then they wouldn’t stop. The arms would continue to pick up more objects and try to dump them into the container. Also, the arms couldn’t do multiple tasks in one command. For example, I couldn’t have them put an object into a container and then pick up the container and dump out the object. That’s two actions and would require two commands.

Still, this is the first time in my life that I’ve been able to control robots using nothing but my voice. That is basically the very definition of “cool.”

Those were the coolest things I tried at I/O this year. Which one was your favorite? Let me know in the comments!