Apple doesn’t have as many Apple Intelligence features to announce this year at WWDC25. The company spent much of the last twelve months implementing the features it had announced last June. A major project, the new Siri, was given new management as recently as March.

However, there will still be a handful of AI features coming. Apple plans to announce Apple Intelligence-generated Shortcuts automations, an Apple Intelligence API for developers and AI-powered health tips. The Foundation model itself will also be improved, with versions in four different sizes currently in testing.

Here’s what to expect on Apple Intelligence next Monday.

Apple Intelligence may not be a big focus at WWDC25

Photo: Apple

Apple first introduced its own suite of AI features under the branding “Apple Intelligence” last year at WWDC24. The company entered the hotly competitive and highly contentious AI landscape with practical features it says anyone can use.

After announcing Writing Tools, Image Playground, Genmoji, Visual Intelligence and more, Apple spent most of the following year finishing and shipping those features in iOS 18.1, 18.2, 18.3 and 18.4. That left too few resources to get another big sweep of features ready for this year’s updates.

That would leave this year’s keynote without a blockbuster segment at the end. The 2023 and 2024 keynotes each dedicated nearly 40 minutes of runtime to introducing Vision Pro and Apple Intelligence, respectively. This year looks more like business as usual, with only a handful of new features and other iterative improvements.

So what Apple Intelligence features can we expect?

Apple Intelligence-powered Shortcuts

Screenshot: D. Griffin Jones/Cult of Mac

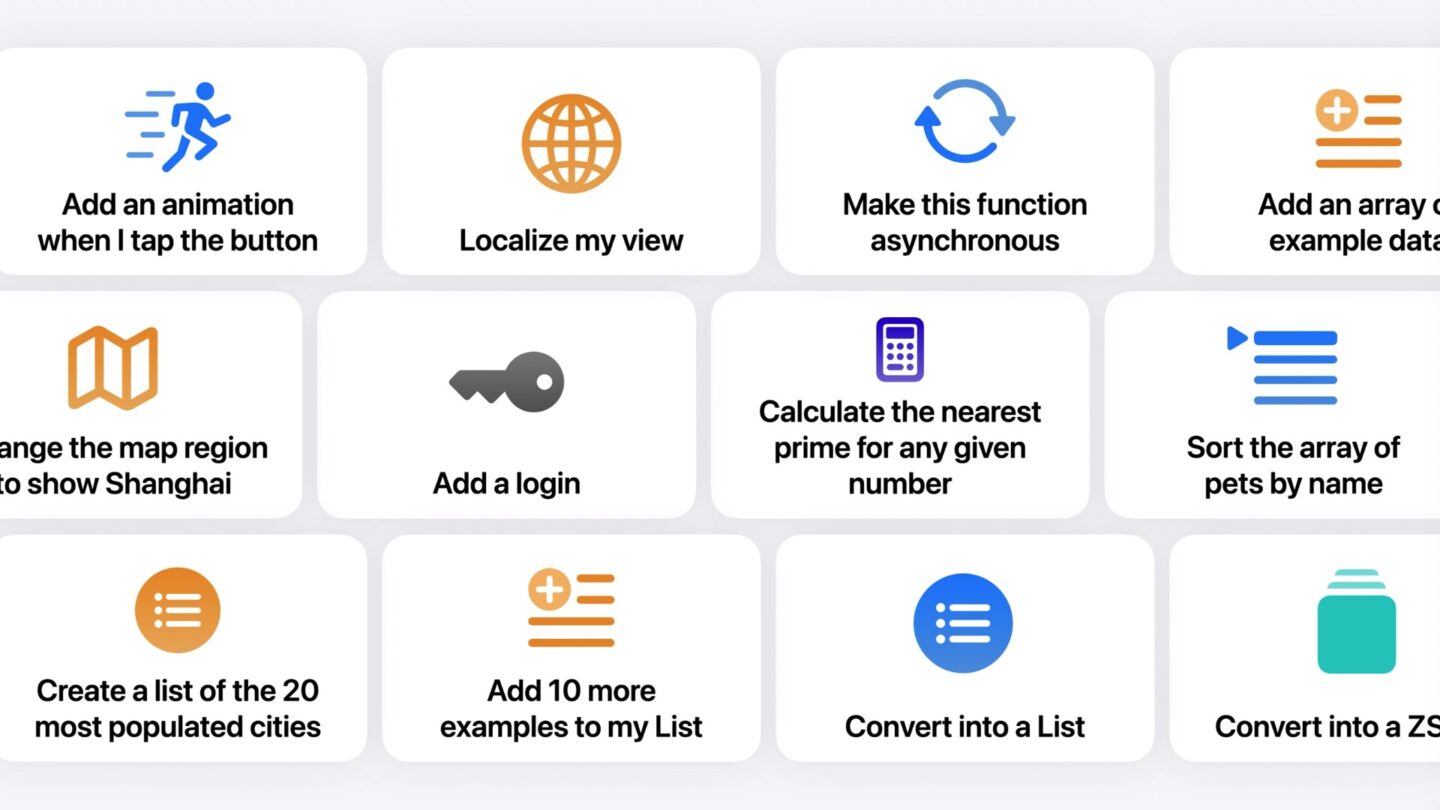

Apple will upgrade Shortcuts with Apple Intelligence. Shortcuts is the app that lets you build automations by visually piecing together actions that control apps on your devices.

According to Bloomberg’s Mark Gurman in his Power On newsletter, “the new version will let consumers create those actions using Apple Intelligence models.”

Creating a Shortcut can be an intimidating process for newcomers and a tedious one for experienced users. It would be a significant upgrade if you could describe what you want in plain language and have Apple Intelligence create the automation for you.

App Intents, the underlying API that powers Shortcuts, was also going to power a smarter Siri that could take a command and instantly carry it out on your behalf. That Siri feature was announced at WWDC24 but delayed in March 2025 to “the coming year,” so it may be reannounced on Monday.

An API for developers

Image: Apple

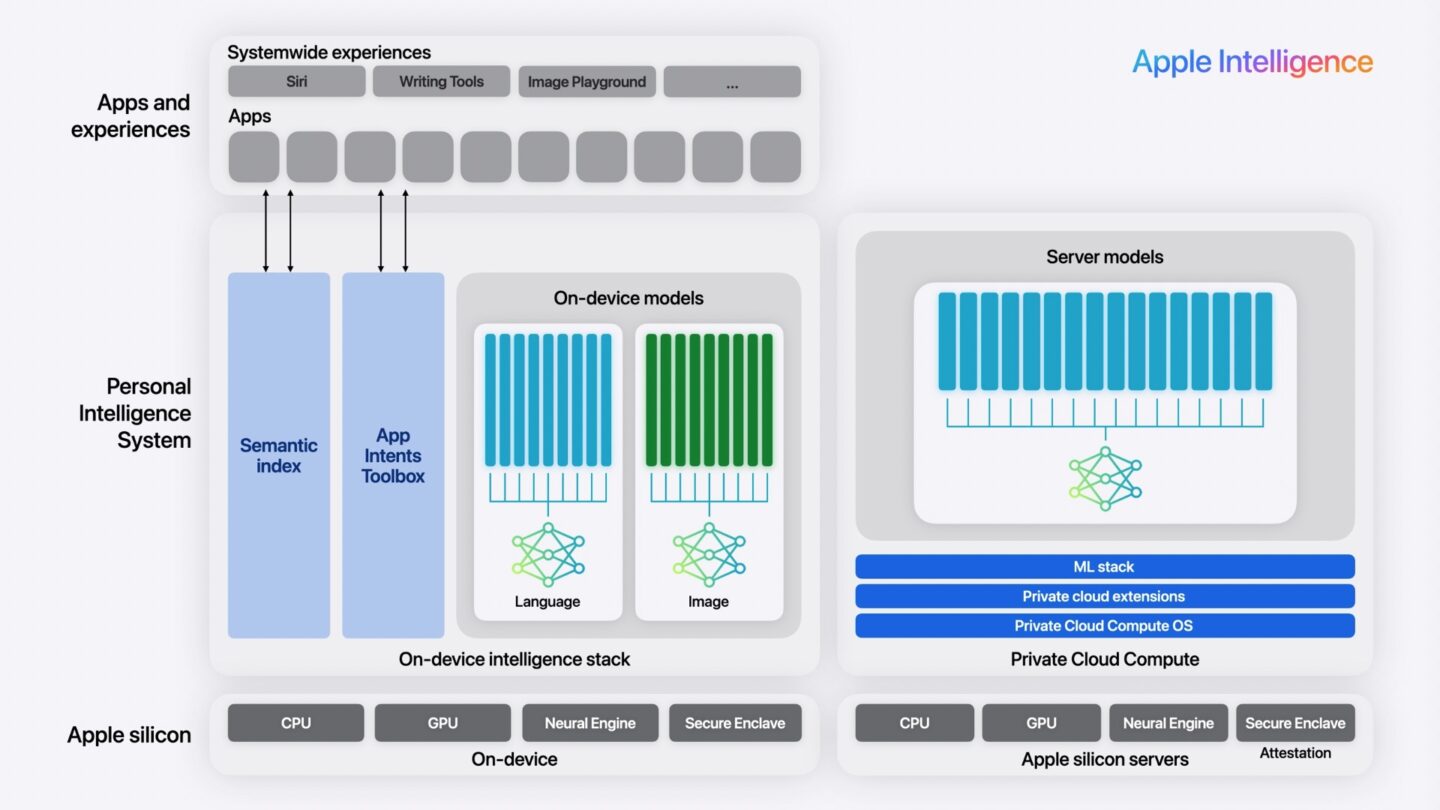

In iOS 18, developers have a limited set of APIs that let them add Apple’s Writing Tools or Image Playground to their apps, but not much else. The big expected announcement this year is that developers will be able to use Apple’s Foundation model directly through an API.

Today, if a developer wants to add AI features to their app, like audio transcription or text summaries, they have to use a third-party model. That means either paying API fees to another company, like Anthropic or OpenAI, or bundling a small open-source model inside the app, which can lower quality, bloat the app and slow down performance.

Giving developers access to Apple Intelligence would have many benefits. Because Apple Intelligence largely runs on-device, developers could add AI features without paying exorbitant API fees. And instead of integrating a third-party model into their app, they could use a convenient API provided by Apple.

This would give many more of the third-party apps you use, from Airbnb to Impulse to Paprika to NetNewsWire, the ability to build custom AI features at a much lower cost.

AI-powered health coach

Image: Apple

Project Mulberry is the internal codename for an AI coach that will analyze the information in the Health app to provide personalized recommendations. Apple is training this AI agent with data from its in-house physicians. According to Bloomberg, “the project remains deep in development” and will be released in spring 2026. So it may not be announced at WWDC at all, and if it is, it will probably be labeled as “coming later.”

This will be part of a bigger redesign of the Health app. Apple plans to make the app easier to navigate and understand, and to add educational videos on topics like sleep, nutrition, physical therapy and mental health.

Apple’s Foundation model is getting better

Image: Apple

According to Bloomberg, Apple is testing several new versions of its Foundation model: “Versions with 3 billion, 7 billion, 33 billion and 150 billion parameters are now in active use.” The largest model would run via Private Cloud Compute, Apple’s cloud AI servers.

While the latest Foundation models apparently keep pace with recent versions of OpenAI’s models, Apple has declined to release a ChatGPT-like conversational chatbot “due to concerns over hallucinations and philosophical differences among company executives.”

Still, a better Foundation model will raise the quality of every other Apple Intelligence feature. Writing Tools will produce higher-quality results, notification summaries will be more accurate, and so on.

Siri features remain in development hell

Image: Apple

Apple is still working on LLM Siri, the ground-up redo of its much-maligned voice assistant. But having only recently been put under new management, it probably won’t be ready this year. “The hope is to finally give Siri a conversational interface,” according to Bloomberg.

Apple is also building a new tool, dubbed “Knowledge,” “that can pull in data from the open web,” much like ChatGPT. It would likely answer open-ended questions you ask Siri by searching online, processing the top answers and reading you a summary of what it finds.

Development of Knowledge is led by Robby Walker, the very same person who fumbled the ball on Siri before Mike Rockwell took over. Reportedly, the project has “already been plagued by some of the same problems that delayed the Siri overhaul.”

More miscellaneous AI features

Image: Apple

Apple will introduce a new low-power mode, which will use AI to “understand trends and make predictions for when it should lower the power draw of certain applications or features.”

The Translate feature, now integrated into AirPods and Siri, may be redesigned or rebranded.

Apple is still developing Swift Assist, the AI programming tool for developers that would be able to write new functions or refactor code. “The company is expected to provide an update” on when it will arrive, Gurman writes. In the meantime, Apple’s own engineers use an internal tool powered by Anthropic.

Apple will also introduce a new AI-powered tool for user interface testing, a challenging part of software development.

Finally, on a somewhat depressing note, Gurman reports that Apple will “quietly rebrand several existing features in apps like Safari and Photos as ‘AI-powered,’” possibly to make it seem like the Apple Intelligence team has been more productive than it actually has been.